David Liu | Elizabeth Major

In a typical sparring match, the judging system consists of one main judge, who follows the participants, and four corner judges. Human judges can miscall hits, and their calls may even be biased. The Sparring Point Detector project uses a camera and computer vision to perform the judging instead, with the aim of removing these problems. At the start of a match, the program detects the gloves of both participants and overlays bounding boxes around them. During the match, the gloves are tracked to detect a collision between a glove and a torso. When a collision is detected, the participant who landed the hit is awarded a point and the round ends. Once a participant reaches the required number of points, the match ends.
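To make the idea of a glove-torso collision concrete, here is a minimal Python sketch of an overlap test between two axis-aligned bounding boxes. The `Box` type, the `boxes_overlap` name, and the assumption that a torso bounding box is already available are all illustrative; the project's actual collision check lives in its C++ code and may work differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box (hypothetical type): top-left corner plus size, in pixels."""
    x: int
    y: int
    w: int
    h: int


def boxes_overlap(a: Box, b: Box) -> bool:
    """Return True when the two boxes share a region of non-zero area."""
    return (
        a.x < b.x + b.w and b.x < a.x + a.w      # overlap along the x axis
        and a.y < b.y + b.h and b.y < a.y + a.h  # overlap along the y axis
    )


# Illustrative values only: a glove box that reaches into a torso box.
glove = Box(x=90, y=40, w=30, h=30)
torso = Box(x=100, y=20, w=80, h=120)
hit = boxes_overlap(glove, torso)
```

Boxes that only touch along an edge are not counted as overlapping here, which is one possible design choice for avoiding spurious hits.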

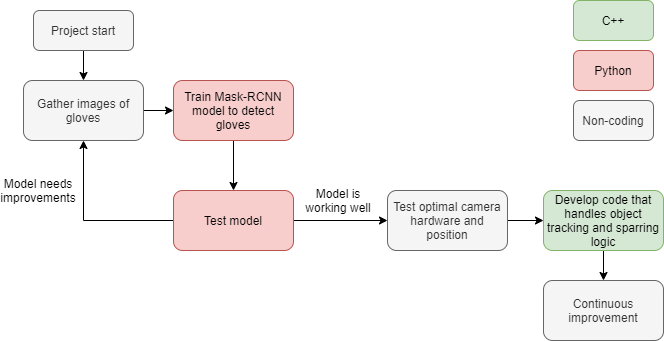

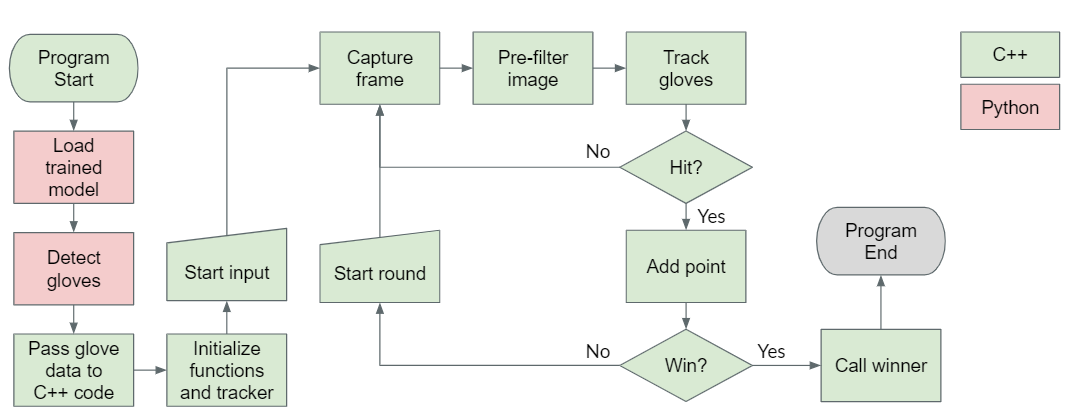

There are two main components to this project: machine learning and computer vision. Machine learning teaches the program to find gloves and assign bounding boxes to them, while computer vision tracks the gloves and determines when to award points. The machine learning is done in Python, while image processing and computer vision are handled in C++. Below is a diagram of the steps performed during this project and an explanation of each section.

To start, images of sparring gloves were gathered to serve as training examples of what gloves look like. In total, 209 images were gathered, generously provided by Action Martial Arts. Using an open-source program called makesense.ai, the bounding box coordinates of each glove in each image were extracted and stored in XML files. The image below is an example of getting bounding boxes using makesense.ai.
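As an illustration of what such an annotation file holds, the following Python sketch reads bounding boxes from an XML string. It assumes the files follow the Pascal VOC layout (`object` elements containing `name` and `bndbox` with `xmin`, `ymin`, `xmax`, `ymax`); the source does not state the exact schema, and the sample file name, label, and coordinates below are made up.

```python
import xml.etree.ElementTree as ET

# Made-up annotation in the assumed Pascal VOC layout.
SAMPLE_XML = """
<annotation>
  <filename>glove_001.jpg</filename>
  <object>
    <name>glove</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>140</ymax></bndbox>
  </object>
  <object>
    <name>glove</name>
    <bndbox><xmin>200</xmin><ymin>35</ymin><xmax>290</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>
"""


def read_boxes(xml_text):
    """Return a list of (label, xmin, ymin, xmax, ymax) tuples from one annotation."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        if bndbox is None:
            continue  # skip objects that carry no box
        label = obj.findtext("name", default="")
        coords = tuple(
            int(float(bndbox.findtext(tag)))  # tolerate values such as "10.0"
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((label, *coords))
    return boxes


boxes = read_boxes(SAMPLE_XML)
```

For files on disk, the same function could be fed the text of each file, or `ET.parse(path).getroot()` could replace `ET.fromstring`.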

The images and XML files gathered in the previous step are used by our Python script to train a Mask R-CNN model. To understand how to train a model with this method, we first followed the tutorial found here that provided steps for training a model to detect kangaroos. In summary, Mask R-CNN allows models to be trained using transfer learning, which is more efficient and requires fewer images than training an object detection model from scratch. It does this by loading the weights of an existing model, specifically weights pre-trained on the COCO dataset, and then taking in the images with their respective bounding box information. It then uses the existing weights together with the training information to create a new model that detects the object it was trained for.

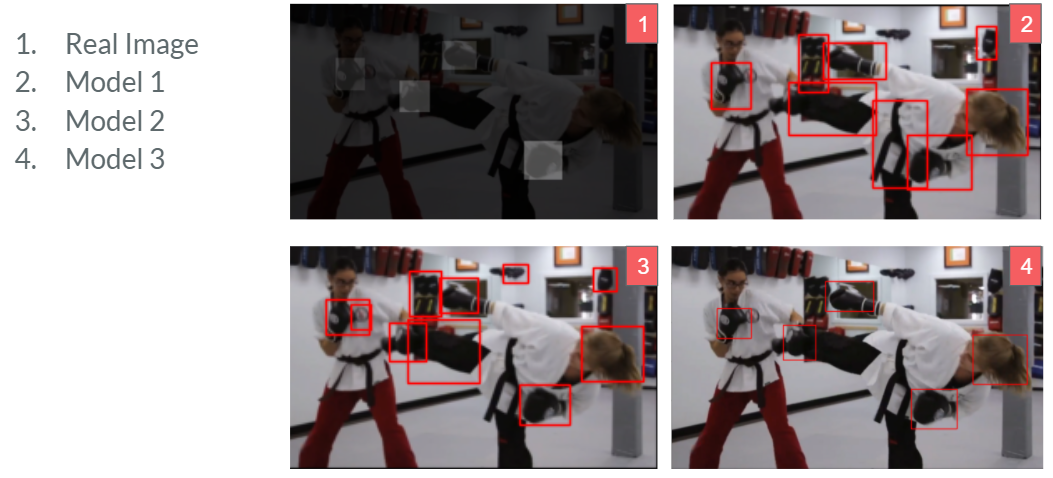

Once a model was created, we ran some Python code to test its performance. Based on this testing, we then improved the model by updating the images used for testing as well as the placement of the bounding boxes. Below is an image showing the results of different iterations of the model trying to find gloves in the same image. We can see that the more the model was fine-tuned, the more accurately it placed the bounding boxes. As such, we continued gathering images, training the model, and testing it until we were satisfied with its performance.

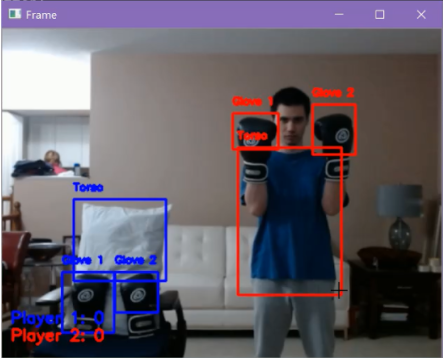
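One common way to put a number on how well a predicted box matches a hand-labelled one is intersection over union (IoU). The source does not say which metric was used during testing, so the Python sketch below is only an example of how such a comparison could be made; the function name and the sample boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    # Corners of the intersection rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so disjoint boxes give an empty intersection.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Made-up boxes: a labelled glove and a prediction shifted to the right.
labelled = (0, 0, 10, 10)
predicted = (5, 0, 15, 10)
score = iou(labelled, predicted)
```

A score of 1.0 means the boxes coincide and 0.0 means they do not overlap at all, so comparing scores across model iterations on the same image gives a rough measure of improvement.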

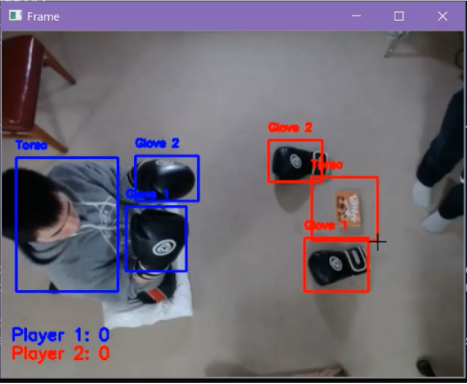

With the desired model created, we began testing different camera angles to determine the best setup for our project. To the right is an example of a straight-on view compared to a top-down view. We found that the top-down view provided better tracking, as there was less occlusion and background interference from this position.

We started the project using the webcam built into a laptop, then obtained a Logitech C920 USB camera. This camera provided better picture quality, but its video feed was too slow. As such, we decided to go with the Grasshopper USB3 camera by FLIR. We selected this camera for its very high frame rate, which improves the tracker's ability to calculate the position of the gloves on each frame. To the right is an example of the initial setup with the Logitech camera compared to the final setup with the Grasshopper camera.

With the model created and the camera set up, we began developing the C++ code that handles the object tracking and sparring logic. However, since the model was created using Python and we found no C++ equivalent for loading it, the C++ code needs to call a Python function. This function loads the model, locates the gloves in a frame, and returns the bounding box coordinates to the C++ program. The C++ program then uses these coordinates to track the gloves and determine when points should be given. It handles the overall flow of the sparring match, including ending the match when a person reaches 5 points and wins. Below is a flowchart showing the overall flow of the program.
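The scoring part of this flow can also be sketched in a few lines of code. The Python example below is illustrative only: the project implements this logic in C++, and the `Match` class, the `record_hit` method, and the participant labels "A" and "B" are hypothetical names. The 5-point winning threshold comes from the description above.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

POINTS_TO_WIN = 5  # threshold stated in the text


@dataclass
class Match:
    """Tracks scores for two participants and stops once one of them wins."""
    points_to_win: int = POINTS_TO_WIN
    scores: Dict[str, int] = field(default_factory=lambda: {"A": 0, "B": 0})
    winner: Optional[str] = None

    def record_hit(self, scorer: str) -> None:
        """Award one point to `scorer`; hits after the match has ended are ignored."""
        if self.winner is not None:
            return
        self.scores[scorer] += 1
        if self.scores[scorer] >= self.points_to_win:
            self.winner = scorer


# Each detected hit ends a round; here the rounds are simply replayed in order.
match = Match()
for scorer in ["A", "B", "A", "A", "B", "A", "A"]:
    match.record_hit(scorer)
```

In the real program, each call to something like `record_hit` would be triggered by the tracker detecting a glove-torso collision, followed by a reset for the next round.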

Over the course of the project, we implemented many suggestions for improvement and kept refining the project until the end of the term. These changes significantly improved its performance and quality.

Below is a video showing the evolution of the sparring point detector's capabilities.